AI-driven impersonation scams are becoming one of the most dangerous forms of modern social engineering. By combining artificial intelligence with publicly available data, attackers can convincingly impersonate real people, roles, or organizations—often without raising immediate suspicion.

These scams go beyond simple deception. They replicate identity cues, communication styles, and behavioral patterns that victims associate with legitimacy. This article explains how AI-driven impersonation scams work, why they are effective, and what risks they pose as AI-generated identity abuse accelerates.

What Are AI-Driven Impersonation Scams?

AI-driven impersonation scams involve using AI tools to mimic the identity of a real person or trusted entity.

These scams may imitate:

- Executives or managers
- Colleagues or family members
- Customer support agents
- Public figures or brands

The goal is not just to look legitimate, but to behave legitimately.

How AI Enables Convincing Impersonation

AI allows attackers to reproduce identity signals that humans trust.

Key capabilities include:

- Voice cloning from short samples
- Writing style imitation
- Context-aware conversational responses
- Timing messages to match real behavior

These capabilities remove many of the inconsistencies that once exposed impersonation.

Common Scenarios for AI-Driven Impersonation

Impersonation scams powered by AI often appear in:

- Urgent financial requests from “executives”
- Emergency messages from “family members”
- Support chats posing as legitimate services
- Internal requests mimicking workplace language

These scenarios exploit authority, familiarity, and urgency simultaneously.

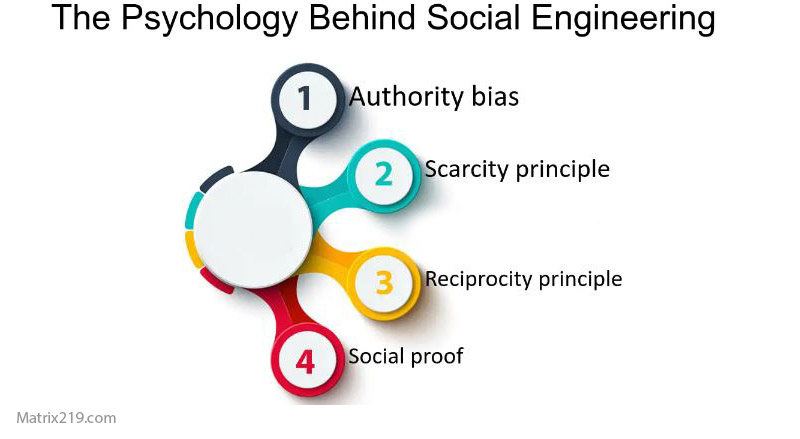

Why AI-Driven Impersonation Is So Effective

Impersonation works because identity is a shortcut for trust.

Victims are more likely to comply when:

- The identity feels familiar
- The tone matches expectations
- The request fits a believable situation

These psychological dynamics align with patterns explained in The Psychology Behind Social Engineering Attacks.

The Role of Data in Impersonation Accuracy

AI-driven impersonation depends heavily on data.

Attackers use:

- Public profiles and posts
- Leaked personal information
- Past communications
- Organizational context

This profiling phase reflects techniques described in How Attackers Profile Victims Using Public Information.

How These Scams Bypass Security Controls

AI-driven impersonation scams avoid the technical indicators that security controls are built to catch.

They succeed by:

- Using legitimate channels
- Triggering voluntary actions
- Avoiding malware or exploits

This reinforces how manipulation bypasses defenses, as discussed in How Social Engineering Attacks Bypass Technical Security.

When Impersonation Escalates Into Major Incidents

Successful impersonation can lead to:

- Unauthorized payments
- Data exposure
- Account takeover
- Long-term access

In organizational settings, a single impersonation event can trigger cascading damage.

Early Warning Signs of AI-Based Impersonation

Even advanced scams may show subtle indicators:

- Requests to bypass verification
- Pressure to act secretly
- Slight inconsistencies in context
- Unusual urgency from familiar contacts

These overlap with signals discussed in Common Social Engineering Red Flags Most Users Miss.
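As a rough illustration, three of the warning signs above (bypassing verification, secrecy, and urgency) can be turned into a simple text check. This is a minimal sketch, not a detection product: the phrase lists and the `find_red_flags` function are hypothetical names invented for this example, and keyword matching is easy to evade. It is best treated as a prompt to verify, never as proof that a message is safe.

```python
# Hypothetical phrase lists (assumptions for illustration only).
# A real deployment would tune these to its own language and traffic.
RED_FLAG_PHRASES = {
    "bypass_verification": ("skip the usual", "no need to verify", "without approval"),
    "secrecy": ("keep this between us", "do not share", "tell no one"),
    "urgency": ("urgent", "immediately", "right now", "within the hour"),
}


def find_red_flags(message: str) -> list[str]:
    """Return the red-flag categories whose phrases appear in the message."""
    text = message.lower()
    return [
        flag
        for flag, phrases in RED_FLAG_PHRASES.items()
        if any(phrase in text for phrase in phrases)
    ]


if __name__ == "__main__":
    sample = "This is urgent. Keep this between us and skip the usual approval step."
    print(find_red_flags(sample))
```

An empty result only means none of the listed phrases appeared. Slight inconsistencies in context, the fourth sign above, are not something a phrase list can capture.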

How Organizations Can Reduce Impersonation Risk

Defense must focus on verification, not recognition.

Effective safeguards include:

- Mandatory call-back or out-of-band checks
- Clear rules for authority-based requests
- Separation of duties
- Treating identity claims as untrusted input

AI increases impersonation quality—but process neutralizes it.
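One way to picture "verification, not recognition" is as a gate that every sensitive request must pass, no matter who it claims to come from. The sketch below is a simplified illustration under stated assumptions: `SensitiveRequest`, `may_execute`, and all field names are hypothetical, and a real workflow would live in a payment or ticketing system rather than a standalone script.

```python
from dataclasses import dataclass, field


@dataclass
class SensitiveRequest:
    claimed_sender: str  # identity claim: treated as untrusted input, never checked for "familiarity"
    action: str          # e.g. "wire transfer"
    requested_by: str    # employee who received the request and entered it
    # Set to True only after a call-back to a contact detail already on file,
    # not one supplied in the request itself.
    callback_verified: bool = False
    approvers: set[str] = field(default_factory=set)


def may_execute(request: SensitiveRequest) -> tuple[bool, str]:
    """Apply the same checks to every request, whoever it claims to be from."""
    if not request.callback_verified:
        return False, "out-of-band verification missing"
    # Separation of duties: the person who entered the request cannot approve it alone.
    independent_approvers = request.approvers - {request.requested_by}
    if not independent_approvers:
        return False, "needs approval from someone other than the requester"
    return True, "ok"


if __name__ == "__main__":
    req = SensitiveRequest(claimed_sender="CEO", action="wire transfer", requested_by="alice")
    print(may_execute(req))  # blocked: no call-back yet
    req.callback_verified = True
    req.approvers.add("bob")
    print(may_execute(req))  # allowed: verified and independently approved
```

Note that `claimed_sender` never influences the decision. That is the point of the design: a perfectly cloned voice or writing style changes nothing if the process does not accept identity cues as evidence.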

External Perspective on AI-Driven Impersonation

Cybersecurity research increasingly warns that AI-powered impersonation is accelerating fraud and social engineering impact, as reflected in Europol Identity Abuse and AI Threat Reports.

Frequently Asked Questions (FAQ)

What makes AI-driven impersonation scams different?

They replicate behavior and identity cues, not just names or logos.

Do these scams always use deepfakes?

No. Many rely on text and behavioral imitation alone.

Are organizations more at risk than individuals?

Organizations typically face higher impact, because an impersonated authority figure can trigger payments, data exposure, or account access in a single request.

Can technical tools reliably detect impersonation?

Not consistently. Verification processes are more reliable.

What is the strongest defense?

Mandatory verification regardless of identity familiarity.

Conclusion

AI-driven impersonation scams represent a shift from pretending to be someone to effectively being that person in the eyes of the victim. By replicating identity cues at scale, attackers erode one of the most fundamental trust mechanisms humans rely on.

Defending against this threat requires abandoning the assumption that identity equals legitimacy. In an AI-driven environment, verification—not familiarity—must guide every sensitive decision.