Artificial intelligence has reshaped how cyber-attacks are planned and executed, and social engineering is no exception. What once relied on manual research and generic messages is now powered by automation, data analysis, and machine-generated content that closely mirrors human behavior.

Understanding how AI is transforming social engineering attacks helps explain why modern scams feel more personal, more convincing, and harder to detect. This article explores how attackers use AI, what has changed compared to traditional techniques, and why these changes increase risk for individuals and organizations alike.


From Manual Deception to Automated Manipulation

Traditional social engineering depended on time-consuming research and trial-and-error messaging. AI has removed many of those limitations.

Attackers can now:

- Generate thousands of tailored messages instantly
- Analyze public data at scale
- Adjust tactics based on victim responses

This shift increases speed, reach, and precision without a matching increase in effort.

The Role of Data Analysis in Modern Attacks

AI systems excel at identifying patterns in large datasets. When applied to social engineering, this enables attackers to:

- Profile victims using social media and public records
- Identify emotional triggers and interests
- Choose optimal timing and communication style

This evolution builds on the techniques discussed in How Attackers Profile Victims Using Public Information, but operates at far greater scale.

Language Models and Message Realism

One of the most visible changes is message quality.

AI-generated content:

- Uses natural grammar and tone
- Adapts to cultural and professional context
- Avoids the spelling and grammar mistakes users were trained to spot

As a result, many traditional “red flags” are no longer reliable indicators of deception.

Why Personalization Has Become the Default

AI allows attackers to personalize messages automatically rather than selectively.

Personalized attacks feel:

- Relevant
- Familiar
- Timely

This increases trust and response rates, reinforcing the psychological dynamics explained in The Psychology Behind Social Engineering Attacks.

Faster Adaptation During Active Attacks

AI enables real-time adjustment.

During an ongoing campaign, attackers can:

- Modify messages based on replies
- Change tone if resistance is detected
- Escalate urgency dynamically

This adaptability resembles live conversational manipulation more than static, one-shot messaging.

Reduced Technical Barriers for Attackers

Previously, advanced social engineering required skill and experience. AI lowers that barrier.

Now:

- Non-experts can generate convincing scams
- Complex targeting no longer requires deep knowledge
- Attack quality is less dependent on attacker skill

This expands the pool of capable attackers significantly.

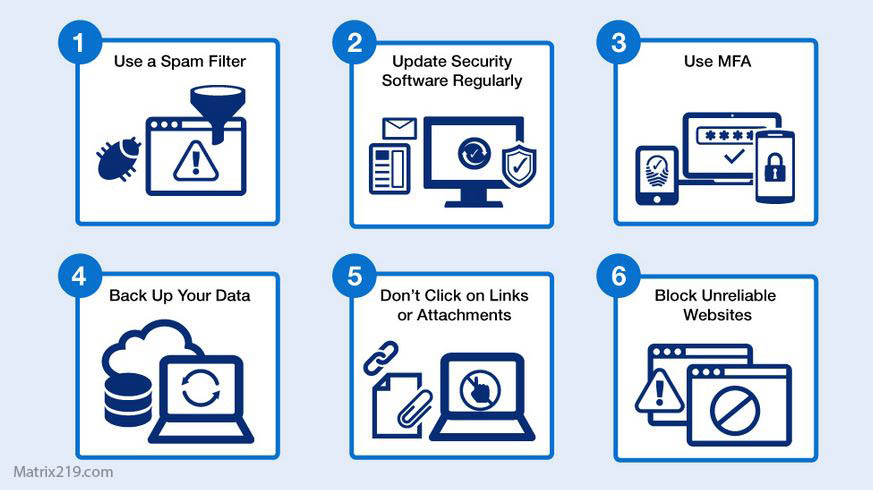

Why Detection Becomes More Difficult

Many security tools rely on patterns, repetition, and known indicators. AI-driven attacks often avoid those signals, sometimes by design.

Challenges include:

- Unique messages per target
- Legitimate-looking language
- Use of trusted platforms

This reinforces the limitations discussed in Phishing Detection Tools Compared.
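To see why unique, per-target messages weaken repetition-based detection, consider a minimal, hypothetical exact-match filter. It fingerprints each message with SHA-256 and blocks anything that matches a previously reported phish. The blocklist, the messages, and the function names are invented for illustration, and real filters combine many more signals. Still, the sketch shows that a verbatim resend is caught while a reworded variant with the same intent passes.

```python
import hashlib


def fingerprint(message: str) -> str:
    """Exact-match fingerprint: SHA-256 of the lowercased, whitespace-normalized text."""
    normalized = " ".join(message.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Hypothetical blocklist built from a single previously reported phishing message.
known_bad = {
    fingerprint("Your account has been suspended. Click here to verify your password.")
}


def is_known_phish(message: str) -> bool:
    """Return True only if the message matches a known fingerprint exactly."""
    return fingerprint(message) in known_bad


# A resend of the reported message (only case and spacing differ) is caught...
resend = "your account has been  suspended. Click here to verify your password."
# ...but a reworded, personalized variant with the same goal is not.
variant = "Hi Dana, finance flagged your login after Tuesday's audit. Please re-confirm your password here."

print(is_known_phish(resend))   # True
print(is_known_phish(variant))  # False
```

Normalizing case and whitespace absorbs trivial changes. It cannot absorb a full rewrite, and generating a rewrite for every target is exactly what language models make cheap.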

Human Judgment Under AI-Driven Pressure

When messages feel authentic and contextual, users are more likely to rely on instinct rather than verification.

AI does not primarily exploit technology. It exploits decision-making speed, trust, and fatigue. This is why human factors remain central, as discussed in Why Humans Are the Weakest Link in Cybersecurity.

External Perspective on AI and Social Engineering

Cybersecurity research increasingly recognizes that AI amplifies existing manipulation techniques rather than replacing them, a trend highlighted in the NIST AI Risk Management Framework.

Frequently Asked Questions (FAQ)

Does AI replace traditional social engineering techniques?

No. It enhances and scales them, but the core manipulation principles stay the same.

Are AI-driven attacks fully automated?

Often only partially. Humans typically still guide the strategy and choose the targets.

Can users detect AI-generated scams?

It is becoming harder, especially without contextual verification.

Does AI make every attack sophisticated?

No. Many attacks remain simple, but average quality has increased.

Is AI more dangerous than previous tools?

It increases scale and realism, which raises overall risk.

Conclusion

The way AI is transforming social engineering attacks becomes clear when you examine speed, personalization, and realism. AI does not invent new manipulation principles; it accelerates and refines existing ones.

Defending against these attacks requires moving beyond static indicators and focusing on verification, process design, and awareness of how AI changes attacker capabilities. As technology evolves, so must our understanding of trust and decision-making in digital environments.