Chatbot social engineering is becoming one of the most effective manipulation techniques in modern cybercrime. By using automated conversational tools, attackers can simulate real human interaction, build trust gradually, and influence victims without triggering traditional security alarms.

Unlike classic phishing messages, chatbot-driven social engineering relies on dialogue, responsiveness, and emotional engagement. This makes the attack feel natural rather than suspicious. Understanding how chatbots are used for social engineering is critical for recognizing modern scams and reducing the risks they pose to individuals and organizations.


What Is Chatbot Social Engineering?

Chatbot Social Engineering Explained Clearly

Chatbot social engineering refers to the use of automated conversational agents to manipulate users into sharing information, granting access, or taking unsafe actions.

These attacks:

- Mimic real conversations
- Respond dynamically to user input
- Gradually guide victims toward harmful decisions

Instead of a single deceptive message, the manipulation unfolds over time.

How Social Engineering Chatbots Differ From Traditional Scams

Traditional scams rely on static scripts. Chatbot-based manipulation is interactive.

Key differences include:

- Continuous engagement instead of one message
- Adaptive responses based on user behavior
- Reduced reliance on links or attachments

This shift makes detection harder and can raise the odds that the manipulation succeeds.

Common Chatbot Social Engineering Attack Scenarios

Where Automated Social Engineering Chatbots Appear

Chatbot-driven attacks often appear in environments where conversation feels expected:

- Fake customer support chats
- Online investment platforms
- Recruitment and job portals
- Dating and relationship platforms

Because users already expect automation, suspicion is lower.

How Attackers Build Social Engineering Chatbots

Social Engineering Chatbot Design Process

Most attackers follow a structured approach:

- Create a believable chatbot persona
- Script initial neutral interactions
- Introduce helpful or emotional engagement
- Gradually steer the conversation toward risk

The targeting stage often relies on the techniques described in How Attackers Profile Victims Using Public Information.

The Role of AI in Chatbot Social Engineering

Modern language models allow chatbots to:

- Maintain conversational context
- Match the user’s tone and language
- Avoid repetitive or suspicious phrasing

This realism removes many cues users once relied on to identify scams.

Why Chatbot Social Engineering Is So Effective

Psychological Factors Behind Chatbot Manipulation

Chatbots exploit several human tendencies:

- Trust in responsiveness
- Emotional reinforcement through dialogue
- Reduced skepticism over time

These dynamics align with principles explained in The Psychology Behind Social Engineering Attacks. Conversation builds familiarity, and familiarity builds trust.

Scaling Social Engineering Through Chatbots

Automation allows attackers to scale manipulation dramatically.

With chatbots, attackers can:

- Run thousands of conversations simultaneously
- Operate continuously without fatigue
- Test and refine messaging in real time

This evolution reflects patterns discussed in How AI Is Transforming Social Engineering Attacks.
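The same scale can leave a trace that a platform operator is able to look for. As a hypothetical sketch, assuming the platform logs an (account, conversation, timestamp) record for every outgoing message over one day, the function below flags accounts that are active in nearly every hour or that run far more distinct conversations than a person plausibly could. `flag_tireless_accounts` and both thresholds are illustrative placeholders, not tuned values.

```python
from collections import defaultdict
from datetime import datetime

def flag_tireless_accounts(
    events: list[tuple[str, str, datetime]],
    min_active_hours: int = 20,
    max_conversations: int = 200,
) -> set[str]:
    """Flag accounts whose one-day activity looks machine-scaled.

    events: (account_id, conversation_id, timestamp) per outgoing message,
    assumed to cover a single day. Thresholds are illustrative placeholders.
    """
    active_hours: dict[str, set[int]] = defaultdict(set)
    conversations: dict[str, set[str]] = defaultdict(set)
    for account, conversation, ts in events:
        active_hours[account].add(ts.hour)        # which clock hours saw activity
        conversations[account].add(conversation)  # distinct conversations touched
    return {
        account
        for account in active_hours
        if len(active_hours[account]) >= min_active_hours  # no sign of fatigue
        or len(conversations[account]) > max_conversations  # massive parallelism
    }

# Hypothetical sample: one account messaging 500 conversations around the clock,
# one account with two conversations during the working day.
day = datetime(2024, 1, 1)
events = [("bot_1", f"c{i}", day.replace(hour=i % 24)) for i in range(500)]
events += [("human_7", "c_a", day.replace(hour=9)), ("human_7", "c_b", day.replace(hour=14))]
print(flag_tireless_accounts(events))  # {'bot_1'}
```

A flag like this is a prompt for review rather than proof: shared support inboxes and legitimate bots can trip it too.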

Why Detection Tools Struggle With Chatbot Attacks

Limits of Phishing Detection Against Chatbots

Chatbot-driven social engineering avoids common detection signals:

- No malicious links early on
- No malware delivery
- Unique conversation paths per victim

These characteristics expose limitations described in Phishing Detection Tools Compared.
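A deliberately naive filter illustrates the gap. The sketch below is hypothetical and assumes a filter that scores messages only on links and risky attachment types, the artifacts classic phishing detection keys on; real detection tools combine many more signals. A link-free chatbot opening passes it without raising a single indicator.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simplified filter: it only looks for links
# and risky attachment types, the artifacts of classic phishing.
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)
RISKY_EXTENSIONS = (".exe", ".js", ".scr", ".zip", ".docm", ".xlsm")

@dataclass
class Message:
    text: str
    attachments: list[str] = field(default_factory=list)

def indicator_score(msg: Message) -> int:
    """Count link and risky-attachment indicators in a single message."""
    score = len(URL_PATTERN.findall(msg.text))
    score += sum(1 for name in msg.attachments if name.lower().endswith(RISKY_EXTENSIONS))
    return score

def flagged(conversation: list[Message], threshold: int = 1) -> bool:
    """Flag the conversation if any single message reaches the threshold."""
    return any(indicator_score(m) >= threshold for m in conversation)

classic_phish = [Message("Your account is locked, verify at http://example.test/login")]
chatbot_opening = [
    Message("Hi! I'm Ana from the support team. How is your day going?"),
    Message("Sorry you had trouble with the app. Can you tell me what happened?"),
    Message("Thanks, that helps. I can sort this out for you personally."),
]

print(flagged(classic_phish))    # True
print(flagged(chatbot_opening))  # False
```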

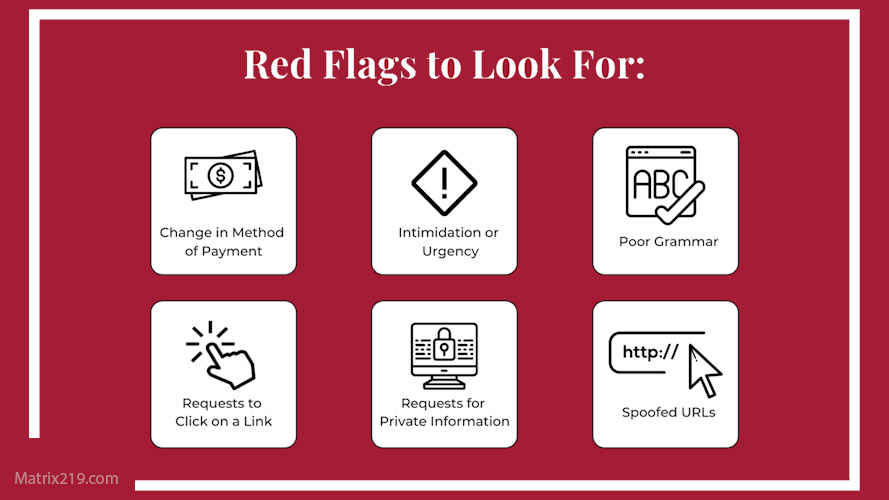

Warning Signs of Chatbot Social Engineering

Red Flags Inside Automated Conversations

Despite their realism, malicious chatbots still leave some indicators:

- Pressure to move off official platforms
- Gradual push toward secrecy
- Requests to bypass verification
- Avoidance of traceable communication

These overlap with patterns discussed in Common Social Engineering Red Flags Most Users Miss.
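Some of these red flags can be approximated with pattern matching over a chat transcript, for example to queue conversations for human review. The sketch below is a hypothetical keyword heuristic that assumes English-language logs; the phrase lists in `RED_FLAG_PATTERNS` are illustrative, not a vetted ruleset, and a context-aware chatbot can paraphrase around any fixed list.

```python
import re

# Illustrative phrases for each red flag listed above (assumed, not exhaustive).
RED_FLAG_PATTERNS = {
    "move_off_platform": [r"\bwhatsapp\b", r"\btelegram\b", r"\bmy personal (number|email)\b"],
    "secrecy": [r"\bdon'?t tell\b", r"\bkeep (this|it) (between us|secret|private)\b"],
    "bypass_verification": [r"\bskip (the )?verification\b", r"\b(one-time|verification) code\b"],
    "avoid_traceable_comms": [r"\bdelete (this|the) chat\b", r"\bdisappearing messages\b"],
}

# Compile once, case-insensitively, so scanning many transcripts stays cheap.
COMPILED = {
    flag: [re.compile(p, re.IGNORECASE) for p in patterns]
    for flag, patterns in RED_FLAG_PATTERNS.items()
}

def scan_transcript(messages: list[str]) -> dict[str, list[int]]:
    """Return, for each red flag that fires, the indices of matching messages."""
    hits: dict[str, list[int]] = {}
    for i, text in enumerate(messages):
        for flag, patterns in COMPILED.items():
            if any(p.search(text) for p in patterns):
                hits.setdefault(flag, []).append(i)
    return hits

transcript = [
    "Hello! Happy to help with your order.",
    "This chat is slow, message me on Telegram instead.",
    "Please keep this between us, the offer is limited.",
    "Just read me the verification code and I'll finish it.",
]
print(scan_transcript(transcript))
# {'move_off_platform': [1], 'secrecy': [2], 'bypass_verification': [3]}
```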


How Organizations Can Defend Against Chatbot Manipulation

Effective defense focuses on process, not conversation quality.

Recommended measures:

- Clearly define official communication channels
- Require verification for sensitive actions
- Educate users that chat ≠ trust
- Monitor unusual interaction patterns

Chatbots should never be treated as proof of legitimacy.
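The principle that verification must not depend on conversation quality can be written as a policy check. The sketch below assumes a hypothetical internal workflow in which each request records the channel it arrived on and whether it was confirmed out of band (for example, a callback to a number already on file); the channel and action names are placeholders. How convincing the chat was is recorded but never consulted.

```python
from dataclasses import dataclass
from enum import Enum

# Placeholder values; each organization defines its own lists.
OFFICIAL_CHANNELS = {"support_portal", "company_app"}
SENSITIVE_ACTIONS = {"password_reset", "payment_change", "access_grant", "data_export"}

class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_VERIFICATION = "require_verification"
    REJECT = "reject"

@dataclass
class Request:
    action: str
    channel: str
    verified_out_of_band: bool           # e.g. callback to a number already on file
    chat_seemed_legitimate: bool = True  # recorded, but deliberately never used

def evaluate(req: Request) -> Decision:
    """Decide using process signals only, never conversation quality."""
    if req.channel not in OFFICIAL_CHANNELS:
        return Decision.REJECT  # redirect the user to an official channel
    if req.action in SENSITIVE_ACTIONS and not req.verified_out_of_band:
        return Decision.REQUIRE_VERIFICATION
    return Decision.ALLOW

print(evaluate(Request("payment_change", "telegram", False)).name)        # REJECT
print(evaluate(Request("payment_change", "support_portal", False)).name)  # REQUIRE_VERIFICATION
print(evaluate(Request("payment_change", "support_portal", True)).name)   # ALLOW
```

The `chat_seemed_legitimate` field is there only to make the design choice visible: it exists on the request, and `evaluate` ignores it.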

External Perspective on Chatbots and Social Engineering

Cybersecurity research increasingly identifies conversational AI as a growing manipulation vector, as highlighted in ENISA AI Threat Landscape.

Frequently Asked Questions (FAQ)

What is chatbot social engineering?

It is the use of automated conversations to manipulate users into unsafe actions.

Are chatbot scams fully automated?

Often partially automated, with humans guiding strategy and escalation.

Can users tell they are talking to a malicious chatbot?

Not reliably, especially when responses are context-aware.

Do chatbot scams always involve money?

No. Many aim to collect data, credentials, or long-term trust first.

What is the best defense against chatbot-based scams?

Verification processes that do not rely on conversation quality.

Conclusion

Chatbot social engineering represents a shift from static deception to interactive manipulation. By sustaining realistic conversations, attackers exploit trust, patience, and emotional engagement over time.

As chat interfaces become standard across digital platforms, awareness must evolve. In an AI-driven threat landscape, conversation itself can be a weapon, and it should never replace verification.