Video has long been considered stronger evidence than text or audio. That assumption no longer holds. Deepfake video scams exploit advances in AI-generated video to create realistic visual impersonations that can deceive individuals, employees, and even entire organizations.

These scams do not rely on obvious deception. Instead, they weaponize familiarity, authority, and visual trust. This article explains how deepfake video scams work, why they are effective, and what risks they introduce as video becomes a trusted communication channel.

How Video Impersonation Became Possible

AI-driven video synthesis can now recreate faces, expressions, and movements using limited source material.

Attackers can:

- Generate realistic facial movements
- Sync lip motion with scripted audio
- Adjust lighting and angles to avoid detection

This capability lowers the barrier for creating convincing visual forgeries.

Common Scenarios Where Deepfake Videos Are Used

Deepfake video scams often appear in contexts where video already feels normal.

Typical scenarios include:

- Executives appearing in urgent video calls
- Managers sending short recorded instructions
- Public figures endorsing actions or requests

These attacks build on the authority-based manipulation discussed in CEO Fraud: How Executives Are Targeted by Phishing, but they add visual confirmation to increase compliance.

Why Visual Trust Is So Powerful

Humans are wired to trust what they see.

Video creates:

- Emotional realism
- Familiar facial cues
- A sense of authenticity

When combined with urgency, visual trust can override skepticism faster than text or voice alone.

How Deepfake Video Scams Are Executed

Most video-based scams follow a predictable structure:

- Collect public video footage
- Train or access a face-generation model
- Produce a short, targeted clip
- Deliver it via trusted platforms

The reconnaissance phase often relies on techniques described in How Attackers Profile Victims Using Public Information.

Why Detection Is Especially Difficult

Deepfake videos are hard to challenge in real time.

Challenges include:

- Short video duration
- Poor video quality hiding artifacts
- Pressure to respond quickly

Unlike written messages, video discourages pause-and-verify behavior.

The Role of Familiar Platforms

Attackers distribute videos through platforms people already trust.

Examples include:

- Collaboration tools
- Messaging apps
- Internal communication channels

Because delivery occurs inside legitimate ecosystems, technical alarms are rarely triggered.

How These Scams Bypass Traditional Security

Video impersonation avoids most technical indicators.

It succeeds by:

- Using legitimate accounts or channels
- Triggering voluntary actions
- Exploiting process gaps

This aligns with broader patterns explained in How Social Engineering Attacks Bypass Technical Security.

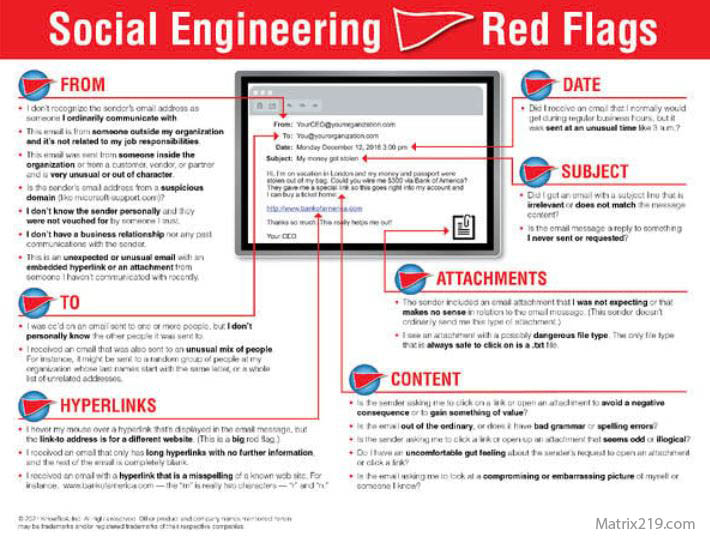

Early Warning Signs to Watch For

Despite realism, some indicators still matter:

- Requests to bypass standard procedures
- Unusual secrecy or urgency
- Refusal to allow independent confirmation
- Video messages replacing normal approval paths

These overlap with manipulation patterns discussed in Common Social Engineering Red Flags Most Users Miss.
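The warning signs listed in this section can be turned into a simple triage checklist. The Python sketch below is illustrative only: the `VideoRequest` fields and the function names are hypothetical and not part of any real tool, and the rule that a single flag is enough to escalate is an assumption that an organization would tune to its own processes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VideoRequest:
    """Hypothetical record of what a reviewer observed about a video request."""
    bypasses_standard_procedure: bool
    unusual_secrecy_or_urgency: bool
    refuses_independent_confirmation: bool
    replaces_normal_approval_path: bool


def red_flags(request: VideoRequest) -> list[str]:
    """Return a human-readable label for each warning sign that is present."""
    checks = {
        "asks to bypass standard procedures": request.bypasses_standard_procedure,
        "unusual secrecy or urgency": request.unusual_secrecy_or_urgency,
        "refuses independent confirmation": request.refuses_independent_confirmation,
        "video replaces the normal approval path": request.replaces_normal_approval_path,
    }
    return [label for label, present in checks.items() if present]


def should_escalate(request: VideoRequest) -> bool:
    """Assumed policy: any single red flag is enough to pause and escalate."""
    return len(red_flags(request)) > 0
```

The checklist deliberately ignores how convincing the video looks. It records only the behavior of the request, which is what the indicators in this section describe.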

Reducing Risk From Video-Based Impersonation

Effective defenses focus on process, not perception.

Key safeguards include:

- Mandatory out-of-band verification
- Clear rules for financial or sensitive actions
- Treating video as untrusted input
- Training employees on visual impersonation risks

Seeing is no longer believing.
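As a rough illustration of "process, not perception", the following Python sketch shows one way an approval rule could treat video as untrusted input. Everything in it is an assumption made for the example: the channel labels, the `SensitiveRequest` type, and the `may_proceed` function are hypothetical, and a real policy would be defined by the organization's own approval workflow.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical labels for channels considered independent of the video itself.
OUT_OF_BAND_CHANNELS = {
    "callback_to_known_number",
    "in_person",
    "ticketing_system",
}


@dataclass
class SensitiveRequest:
    """Hypothetical financial or sensitive action requested over some channel."""
    description: str
    received_via: str  # e.g. "video_call" or "recorded_video"
    confirmations: Set[str] = field(default_factory=set)


def may_proceed(request: SensitiveRequest) -> bool:
    """Allow the action only after at least one out-of-band confirmation.

    Video channels are not in OUT_OF_BAND_CHANNELS, so a video can never
    confirm itself. The channel the request arrived on is also excluded.
    """
    independent = (request.confirmations & OUT_OF_BAND_CHANNELS) - {request.received_via}
    return bool(independent)
```

For example, a transfer requested in a video call and "confirmed" only by a second video message would be blocked, while the same request confirmed by a callback to a number already on file would be allowed.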

External Perspective on Deepfake Video Abuse

Security and policy research increasingly warns that deepfake video will be used more frequently in fraud and influence operations, as reflected in Europol Online Impersonation Threat Reports.

Frequently Asked Questions (FAQ)

Are deepfake video scams common today?

They are emerging rapidly and becoming more accessible.

Can people reliably spot fake videos?

Not consistently, especially in short or low-quality clips.

Do these attacks require advanced skills?

AI tools have significantly reduced technical skill requirements.

Are video calls safer than recorded videos?

Not necessarily. Both can be manipulated or staged.

What is the most reliable defense?

Verification procedures that do not rely on video authenticity.

Conclusion

Deepfake video scams exploit one of the strongest trust signals humans rely on: visual confirmation. By combining realistic imagery with authority and urgency, attackers bypass skepticism and accelerate compliance.

As video becomes a standard communication tool, security strategies must adapt. In an AI-driven landscape, video should be treated as a potential manipulation vector, not as proof of identity.