Voice-based scams have existed for years, but recent advances in artificial intelligence have fundamentally changed how they work. Deepfake voice technology allows attackers to replicate real voices with alarming accuracy, transforming traditional phone scams into highly convincing impersonation attacks.

Understanding how vishing is evolving through deepfake voice technology is essential for recognizing modern threats. This article explains how deepfake voice scams work, why they are more effective than traditional vishing, and what risks they introduce for individuals and organizations.


How Voice Scams Worked Before AI

Before AI-driven voice cloning, phone scams relied on:

- Scripts and social pressure
- Accents or authority cues
- Limited personalization

While effective, these attacks often failed when victims recognized unfamiliar voices or inconsistencies.

This earlier model is explained in Vishing Attacks: Voice Phishing Scams on the Rise.

What Changed With Deepfake Voice Technology

AI-enabled voice synthesis allows attackers to:

- Clone voices from short audio samples
- Match tone, pace, and emotion
- Adapt speech dynamically during calls

This shift removes one of the strongest human verification signals: voice familiarity.

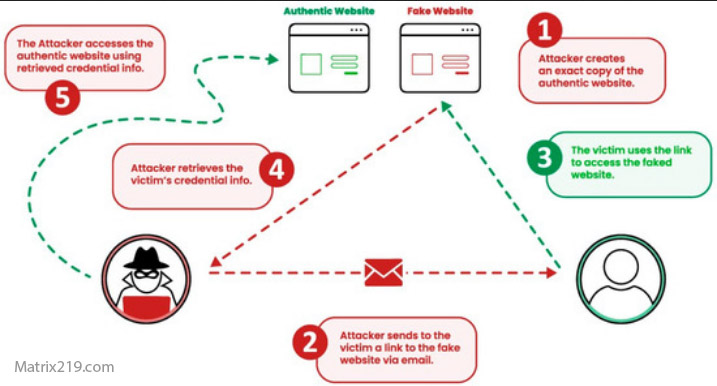

How Attackers Create Deepfake Voice Scams

Deepfake voice scams typically follow a structured process:

- Collect audio from public sources
- Train or access a voice cloning model
- Combine the cloned voice with a scam script
- Initiate real-time or pre-recorded calls

The profiling stage often relies on techniques described in How Attackers Profile Victims Using Public Information.


Why Deepfake Voice Scams Are More Convincing

These attacks succeed because they exploit trust at a deeper level: the victim is not reading a message but hearing a voice they already know.

Victims often:

- Recognize the voice
- Assume the caller is who they sound like
- Relax their usual verification instincts

This psychological effect is rooted in the trust dynamics explained in The Psychology Behind Social Engineering Attacks.

Realistic Scenarios Where Deepfake Voice Is Used

Common scenarios include:

- Executives requesting urgent payments
- Family members asking for emergency help
- Managers instructing staff to bypass procedures

These attacks closely resemble the patterns seen in CEO Fraud: How Executives Are Targeted by Phishing, but they add a powerful layer of auditory confirmation.

Why Traditional Defenses Struggle With Voice Deepfakes

Most security controls focus on digital indicators, not human perception.

Challenges include:

- No visual verification
- Caller ID spoofing
- Real-time emotional manipulation

This is why voice-based attacks bypass technical safeguards, as discussed in How Social Engineering Attacks Bypass Technical Security.

The Role of Real-Time Interaction

Unlike email or SMS scams, deepfake voice attacks adapt as the conversation unfolds.

Attackers can:

- Respond to questions
- Adjust urgency
- Escalate pressure

This dynamic interaction increases success rates compared to static messages.

Early Warning Signs of Deepfake Voice Scams

Even when the voice is convincing, some signals may indicate deception:

- Requests to bypass normal verification
- Pressure to act immediately
- Refusal to allow a call-back
- Unusual demands for secrecy

These overlap with the indicators discussed in Common Social Engineering Red Flags Most Users Miss.
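The warning signs above can be turned into a simple checklist to run through before acting on a request. What follows is a minimal Python sketch under stated assumptions. The `CallObservation` record and the `red_flags` and `should_pause` helpers are hypothetical names, not part of any real tool. The rule that a single flag is enough to pause and verify is an assumed policy choice, not an established standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CallObservation:
    """Hypothetical record of what the recipient noticed during a call."""
    asks_to_bypass_verification: bool = False
    pressure_to_act_immediately: bool = False
    refuses_call_back: bool = False
    demands_secrecy: bool = False


def red_flags(call: CallObservation) -> List[str]:
    """Return the warning signs present on this call, in a fixed order."""
    checks = {
        "bypass normal verification": call.asks_to_bypass_verification,
        "pressure to act immediately": call.pressure_to_act_immediately,
        "refusal to allow call-back": call.refuses_call_back,
        "unusual secrecy demands": call.demands_secrecy,
    }
    return [name for name, present in checks.items() if present]


def should_pause(call: CallObservation) -> bool:
    # Assumed policy: any single flag means stop and verify out of band.
    # How familiar the voice sounded is deliberately not an input.
    return len(red_flags(call)) > 0


call = CallObservation(pressure_to_act_immediately=True, refuses_call_back=True)
print(red_flags(call))     # ['pressure to act immediately', 'refusal to allow call-back']
print(should_pause(call))  # True
```

The record intentionally has no field for "the voice sounded familiar". In this sketch, a convincing voice cannot offset a red flag.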

How Organizations Can Reduce Risk

Effective mitigation focuses on process, not voice recognition.

Key measures include:

- Mandatory call-back procedures
- Out-of-band verification
- Clear rules for financial or sensitive requests
- Training that assumes voice impersonation is possible

Voice should never be treated as proof of identity.
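The Python sketch below shows one way these measures might be encoded in an approval workflow. It assumes a hypothetical internal directory (`DIRECTORY`), a hypothetical set of sensitive actions, and an illustrative dual-approval threshold of 10,000. None of these names or values come from a real product or standard. The key design choice is that neither the caller ID nor the voice appears in the approval logic. A sensitive request is approved only when two conditions hold: it has been confirmed on a call back to a number from the organization's own records, and, above the threshold, a second person has also approved it.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical internal directory: numbers come from the organization's own
# records, never from the incoming call or anything the caller provides.
DIRECTORY = {"cfo": "+1-555-0100"}

# Hypothetical policy values, for illustration only.
SENSITIVE_ACTIONS = {"wire_transfer", "change_bank_details", "reset_credentials"}
DUAL_APPROVAL_THRESHOLD = 10_000.0


@dataclass
class Request:
    claimed_identity: str  # who the caller says they are
    caller_id: str         # displayed number; can be spoofed, so never used below
    action: str
    amount: float = 0.0


def call_back_number(req: Request) -> Optional[str]:
    """Number to dial for verification, looked up only in the directory."""
    return DIRECTORY.get(req.claimed_identity)


def approve(req: Request, confirmed_on_call_back: bool, second_approver: bool) -> bool:
    if req.action not in SENSITIVE_ACTIONS:
        return True  # this policy adds no extra checks for routine requests
    # Unknown identity or no confirmation via the directory number: reject.
    if call_back_number(req) is None or not confirmed_on_call_back:
        return False
    # Large amounts also need a second person to sign off.
    if req.amount >= DUAL_APPROVAL_THRESHOLD and not second_approver:
        return False
    return True


req = Request("cfo", caller_id="+1-555-0100", action="wire_transfer", amount=50_000)
print(approve(req, confirmed_on_call_back=False, second_approver=False))  # False
print(approve(req, confirmed_on_call_back=True, second_approver=True))    # True
```

In the example, the caller ID matches the directory entry, yet the request is still rejected until the call-back confirmation happens. A displayed number, like a familiar voice, is treated as a claim to verify, not as proof.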

External Perspective on Voice Deepfakes

Cybersecurity authorities increasingly warn that voice cloning is being actively used in fraud and impersonation campaigns, as highlighted in Europol AI-Enabled Fraud Warnings.

Frequently Asked Questions (FAQ)

How realistic are deepfake voice scams today?

Very realistic. Short audio samples are often enough to clone a voice.

Are these attacks fully automated?

Sometimes. Many involve AI-generated voices guided by human operators.

Can people reliably detect voice deepfakes?

Not consistently, especially under stress or urgency.

Are voice deepfakes illegal?

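Using them to defraud or impersonate someone is illegal in most jurisdictions. The technology itself has legitimate uses, but it is commonly abused in fraud and impersonation crimes.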

What is the best defense against voice-based impersonation?

Verification processes that do not rely on voice alone.

Conclusion

Deepfake voice scams show how vishing is evolving from scripted calls into highly personalized impersonation attacks. By exploiting familiarity and emotional trust, attackers bypass one of the oldest human verification methods.

Defending against this threat requires a shift in mindset: voices can no longer be trusted as identity proof. In an AI-driven landscape, verification must rely on process, not perception.