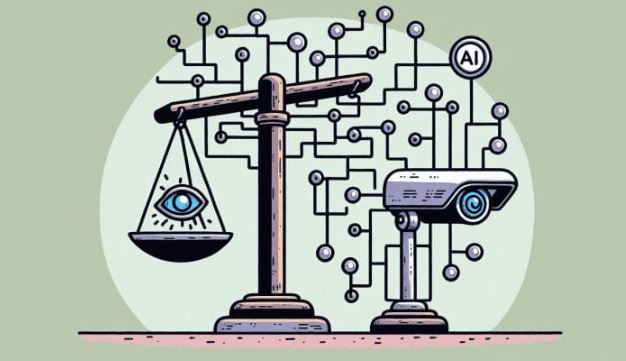

AI-powered surveillance technology uses computer vision, facial recognition, and machine learning algorithms to monitor public and private spaces. While it can improve security and operational efficiency, it also raises significant ethical concerns about privacy, fairness, and accountability.

Key Ethical Implications

1. Privacy Concerns

AI surveillance can collect and analyze massive amounts of personal data, often without consent, potentially violating privacy rights and personal freedoms.

2. Bias and Discrimination

Facial recognition and other AI models can misidentify individuals, and error rates are often higher for members of minority groups. Such errors can lead to unfair treatment, wrongful accusations, or discriminatory practices.
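One way to make this concern measurable is to compare error rates across demographic groups on a labeled evaluation set. The sketch below is illustrative only: the function name `false_match_rate_by_group`, the record layout, and the sample data are all assumptions, not part of any particular surveillance product or library.

```python
from collections import defaultdict


def false_match_rate_by_group(records):
    """Return the false match rate per group.

    `records` is an iterable of (group, predicted_match, actual_match)
    tuples. This layout is a hypothetical one chosen for the example.
    """
    false_matches = defaultdict(int)
    non_matches = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # only true non-matches can produce a false match
            non_matches[group] += 1
            if predicted:
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in non_matches.items() if n > 0}


# Made-up evaluation results, for illustration only.
records = [
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, False),
    ("group_a", True, True),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", True, True),
]

rates = false_match_rate_by_group(records)
# Ratio between the worst and best group; 1.0 would mean equal rates.
disparity = max(rates.values()) / min(rates.values())
print(rates, disparity)
```

A large gap between groups is a signal to investigate further, not a complete fairness audit; other metrics, such as false non-match rates, may matter just as much depending on how the system is used.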

3. Transparency and Accountability

AI-driven surveillance decisions are often opaque. Lack of explainability makes it difficult to hold organizations accountable for misuse or errors.

4. Chilling Effect on Society

People who know they are continuously monitored may change how they behave. This can limit freedom of expression and foster a culture of self-censorship in public spaces.

5. Legal and Regulatory Challenges

Existing laws may not fully address AI surveillance technologies, creating gaps in enforcement and compliance across regions.

Strategies for Ethical AI Surveillance

- Data Minimization: Collect only essential data and anonymize when possible.
- Bias Mitigation: Test AI systems for fairness and correct discriminatory patterns.
- Transparent Policies: Clearly communicate how AI surveillance is used and monitored.
- Oversight Mechanisms: Implement human oversight and accountability frameworks.
- Legal Compliance: Follow privacy regulations and obtain necessary consents.
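As a rough illustration of the data minimization strategy above, the sketch below keeps only an allow-list of fields from a detection event and replaces a direct identifier with a keyed hash. The field names (`camera_id`, `person_id`, `face_embedding`, and so on), the `minimize_event` function, and the key handling are all hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization, since anyone holding the key can re-link records.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields are retained as-is.
ALLOWED_FIELDS = {"camera_id", "timestamp", "event_type"}


def minimize_event(event, secret_key):
    """Drop non-essential fields and pseudonymize the person identifier."""
    minimized = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    if "person_id" in event:
        # A keyed hash (HMAC-SHA256) replaces the raw identifier.
        minimized["person_token"] = hmac.new(
            secret_key,
            str(event["person_id"]).encode("utf-8"),
            hashlib.sha256,
        ).hexdigest()
    return minimized


# Made-up event, for illustration only.
raw_event = {
    "camera_id": "cam-07",
    "timestamp": "2024-01-01T12:00:00Z",
    "event_type": "entry",
    "person_id": "employee-1234",
    "face_embedding": [0.12, 0.98, 0.33],
}

stored_event = minimize_event(raw_event, secret_key=b"example-key-not-for-production")
print(stored_event)
```

In a real deployment the key would need to be stored and rotated securely, and the allow-list would follow from a documented purpose for each retained field.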

Conclusion

AI surveillance offers powerful tools for security and operational efficiency, but ethical challenges such as privacy invasion, bias, and lack of transparency must be addressed. Responsible deployment helps ensure that AI systems enhance safety while respecting individual rights and societal values.