The future of social engineering attacks is being shaped by artificial intelligence, data abundance, and changing digital behavior. As communication becomes faster and more automated, manipulation techniques are evolving to feel more natural, personal, and credible than ever before.

Rather than relying on obvious tricks, future social engineering attacks will blend seamlessly into everyday workflows, platforms, and conversations. This article explores how social engineering is likely to evolve, what trends are already visible, and what this means for individuals and organizations preparing for what comes next.

From Isolated Scams to Continuous Manipulation

Future attacks will not look like one-off messages.

Instead, social engineering will:

- Unfold over longer periods
- Use multiple channels and touchpoints
- Adapt based on victim responses

This continuous engagement model increases trust gradually rather than forcing quick decisions.

AI Will Accelerate Personalization and Timing

Artificial intelligence will continue to refine how attackers choose targets and moments.

Future attackers will:

- Predict optimal timing for contact
- Adjust tone based on emotional signals
- Personalize messages without manual effort

These capabilities extend trends discussed in How AI Is Transforming Social Engineering Attacks and remove most of the guesswork from targeting.

Identity Abuse Will Become More Convincing

Impersonation will grow more sophisticated.

Expect:

- More realistic voice and video impersonation
- Behavioral imitation across platforms
- Blended use of text, audio, and video

These developments build on patterns seen in AI-Driven Impersonation Scams Explained and will challenge traditional verification assumptions.

Trusted Platforms Will Remain Primary Attack Vectors

Future social engineering will increasingly occur inside legitimate ecosystems.

Attackers will abuse:

- Collaboration tools
- Cloud services
- Messaging platforms
- Identity and authorization systems

Because these platforms are trusted, attacks will feel routine rather than suspicious.

Detection Will Shift From Content to Context

As message quality improves, content-based detection will lose effectiveness.

Defensive focus will move toward:

- Behavioral context
- Process deviations
- Unusual request patterns

This shift reflects limitations discussed in Phishing Detection Tools Compared and requires rethinking how alerts are prioritized.
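
As a rough illustration of prioritizing alerts by context rather than content, the sketch below scores a request using only contextual signals and never inspects the message text. The `Request` fields, the weights, and the thresholds are all hypothetical assumptions chosen for illustration, not values from any real detection product.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical record of an inbound request; every field is illustrative."""
    sender_known: bool        # sender has prior history with the recipient
    usual_channel: bool       # arrived through the channel this sender normally uses
    follows_process: bool     # goes through the documented approval path
    requests_last_hour: int   # number of requests from this sender in the last hour
    asks_for_secrecy: bool    # asks the recipient to skip or hide normal steps


def context_score(req: Request) -> int:
    """Return a risk score built from context only (assumed weights)."""
    score = 0
    if not req.sender_known:
        score += 2  # behavioral context: no established relationship
    if not req.usual_channel:
        score += 1  # behavioral context: unfamiliar channel
    if not req.follows_process:
        score += 3  # process deviation
    if req.requests_last_hour > 3:
        score += 1  # unusual request pattern: burst of requests
    if req.asks_for_secrecy:
        score += 2  # unusual request pattern: pressure to bypass controls
    return score


def alert_priority(score: int) -> str:
    """Map a score to an alert priority using assumed thresholds."""
    if score >= 5:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


routine = Request(True, True, True, 1, False)
suspicious = Request(True, False, False, 5, True)
print(alert_priority(context_score(routine)))
print(alert_priority(context_score(suspicious)))
```

In this sketch a well-written message from a known sender can still rank as high priority, because the score depends on how the request deviates from normal process rather than on how it reads.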

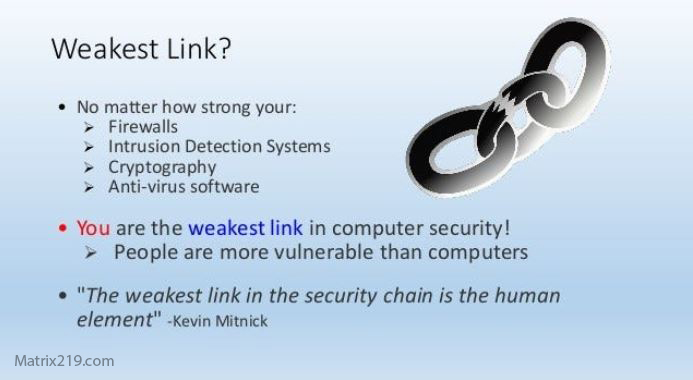

Human Decision-Making Will Remain Central

Despite automation, humans will still make final decisions.

Future risk will depend on:

- How much authority is delegated digitally
- Whether verification is required
- How pressure and urgency are handled

These factors reinforce insights explained in Why Humans Are the Weakest Link in Cybersecurity—not as a flaw, but as a responsibility point.

The Line Between Fraud and Social Engineering Will Blur

As manipulation becomes more adaptive, distinctions between scam types will fade.

Future attacks may:

- Combine fraud, phishing, and impersonation
- Transition smoothly between techniques
- Escalate only after trust is established

This blending will complicate classification and response.

Why Awareness Alone Will Not Be Enough

Training will remain important, but it will be insufficient by itself.

Future defenses must:

- Assume users will make mistakes
- Design processes that limit damage
- Enforce verification regardless of confidence

Awareness supports defense—but structure sustains it.
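
One way to picture "structure" is a policy check that ignores how confident the user feels. The sketch below is a minimal example under stated assumptions: the action names, the `MAX_UNAPPROVED_AMOUNT` limit, and the `ActionRequest` fields are hypothetical and do not describe any specific system.

```python
from dataclasses import dataclass

# Assumed set of actions that always need verification (illustrative names).
SENSITIVE_ACTIONS = {"wire_transfer", "password_reset", "vendor_bank_change"}

# Assumed cap on what a single person can approve, to limit damage from mistakes.
MAX_UNAPPROVED_AMOUNT = 1_000.0


@dataclass
class ActionRequest:
    action: str
    amount: float
    requester_confidence: float  # how sure the employee feels, 0.0 to 1.0
    verified_out_of_band: bool   # confirmed through a separate, known-good channel


def decide(req: ActionRequest) -> str:
    """Apply the policy; requester_confidence is deliberately never consulted."""
    if req.action in SENSITIVE_ACTIONS and not req.verified_out_of_band:
        return "blocked: verification required"
    if req.amount > MAX_UNAPPROVED_AMOUNT:
        return "held: second approver required"
    return "allowed"


# A very confident employee is still blocked without verification.
print(decide(ActionRequest("wire_transfer", 500.0, 0.99, False)))
# A verified but large transfer is held for a second approver.
print(decide(ActionRequest("wire_transfer", 5_000.0, 0.99, True)))
```

The point of the design is that a convincing impersonation can raise a user's confidence, but it cannot change the outcome of the check.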

Preparing for the Next Phase of Social Engineering

Organizations should prepare by:

- Reducing implicit trust in digital identity
- Formalizing verification for sensitive actions
- Limiting public exposure of internal details
- Treating AI as both a threat and a tool

Preparation is about resilience, not prediction.

External Perspective on Emerging Trends

Cybersecurity research increasingly frames social engineering as an adaptive, long-term threat rather than a solvable technical problem, as reflected in the World Economic Forum Cyber Risk Outlook.

Frequently Asked Questions (FAQ)

Will social engineering attacks replace technical attacks?

No. They will increasingly complement them.

Will AI make social engineering unstoppable?

No. It increases scale and realism, not inevitability.

Are future attacks likely to be fully automated?

Some will be, but humans will still guide strategy.

Can organizations prepare effectively?

Yes, by focusing on verification and process design.

What will matter most in defense?

Structure, accountability, and decision discipline.

Conclusion

The future of social engineering attacks is not defined by a single technology, but by how manipulation adapts to trust, automation, and routine. As attacks become more natural and less visible, reliance on intuition alone will become increasingly risky.

Defending against what comes next requires accepting that deception will evolve—and designing systems that assume manipulation, enforce verification, and limit the impact of human error.