Personalized phishing using AI and data leaks has become one of the most effective manipulation techniques in modern cybercrime. By combining artificial intelligence with leaked personal data, attackers craft messages that feel relevant, timely, and trustworthy—often indistinguishable from legitimate communication.

Unlike generic phishing, these attacks leverage real details about the victim to increase credibility and response rates. Understanding how personalized phishing works, where the data comes from, and why AI amplifies its impact is essential for reducing risk in today’s digital environment.


What Is Personalized Phishing Using AI and Data Leaks?

Personalized phishing refers to attacks that tailor messages to a specific individual using personal or contextual information. When combined with AI, this personalization scales efficiently and adapts in real time.

Attackers use personalization to:

- Reference real names, roles, or relationships
- Align messages with recent activities
- Match tone and timing to the victim’s context

This approach replaces guesswork with precision.

How Data Leaks Fuel Personalized Phishing

Sources of Data Used in Targeted Attacks

Leaked data provides the raw material attackers need. Common sources include:

- Breached databases from past incidents
- Exposed customer records
- Public profiles and social media
- Data brokers and scraped datasets

Even small details can dramatically increase believability.

These reconnaissance techniques align with the methods discussed in How Attackers Profile Victims Using Public Information.

The Role of AI in Scaling Personalization

AI removes the manual effort traditionally required to tailor messages.

With AI, attackers can:

- Analyze large datasets quickly
- Identify relevant personal details
- Generate customized messages at scale

This turns targeted phishing from a high-effort tactic into a repeatable process.

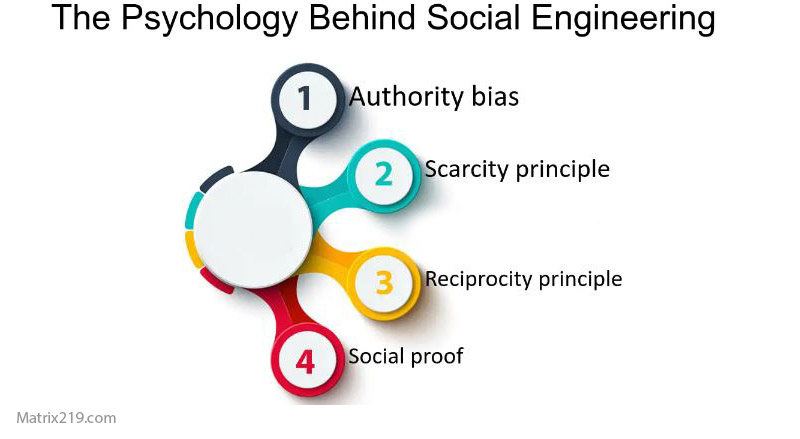

Why Personalized Messages Are Harder to Ignore

Personalized phishing succeeds because it feels familiar.

Victims are more likely to respond when messages:

- Reference known colleagues or vendors
- Match internal language or workflows
- Arrive at expected times

These psychological effects are rooted in the trust dynamics explained in The Psychology Behind Social Engineering Attacks.


Common Scenarios for Personalized Phishing Attacks

Personalized phishing appears across many contexts, including:

- Workplace requests from “known” contacts
- Account alerts referencing recent activity
- Financial messages tied to real transactions
- Support messages mentioning specific services

These scenarios reduce suspicion and shorten decision time.
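One defensive check aimed at the "known contact" scenario is comparing a sender's display name against an internal directory: if the name matches a colleague or vendor but the sending address does not, the message deserves verification before anyone acts on it. The Python sketch below assumes a hypothetical `TRUSTED_DIRECTORY` mapping and uses the standard library's `email.utils.parseaddr`. It cannot catch a message sent from a genuinely compromised account or through a legitimate platform, so treat it as one signal among several rather than a complete defense.

```python
from email.utils import parseaddr

# Hypothetical internal directory: lowercase display name -> the address on file.
TRUSTED_DIRECTORY = {
    "dana whitfield": "dana.whitfield@example.com",
    "accounts payable": "ap@example.com",
}


def display_name_mismatch(from_header: str, directory: dict[str, str]) -> bool:
    """Return True when the display name matches a known contact
    but the actual sending address is not the one on file."""
    name, address = parseaddr(from_header)
    expected = directory.get(name.strip().lower())
    if expected is None:
        return False  # unknown name: this check says nothing either way
    return address.lower() != expected.lower()


print(display_name_mismatch('"Dana Whitfield" <dana.whitfield@example.com>', TRUSTED_DIRECTORY))  # False
print(display_name_mismatch('Dana Whitfield <dana.w.payroll@freemail.example>', TRUSTED_DIRECTORY))  # True
```

A `True` result does not prove fraud (people do write from personal accounts), but it is exactly the case where confirming through a known channel costs little and blocks a common pretext.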

How AI Improves Message Quality and Timing

Beyond content, AI optimizes delivery.

Attackers use AI to:

- Choose optimal sending times
- Adjust urgency based on engagement
- Refine language after each interaction

This adaptability mirrors the broader trends discussed in How AI Is Transforming Social Engineering Attacks.

Why Detection Tools Struggle With Personalized Phishing

Traditional detection relies on repetition and known patterns.

Personalized phishing avoids those signals by:

- Generating unique messages per target
- Using legitimate platforms and domains
- Avoiding malware or suspicious attachments

These limitations are explored further in Phishing Detection Tools Compared.
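To see why unique per-target messages slip past pattern matching, consider a deliberately simplified model of signature detection: hash the body of each reported phishing email and block any later message with the same hash. The Python sketch below works under that assumption only; the messages, names, and blocklist are hypothetical, and real filters combine many more signals than an exact hash.

```python
import hashlib


def fingerprint(message: str) -> str:
    """Exact-match fingerprint: SHA-256 hex digest of the UTF-8 message body."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


# Hypothetical blocklist built from one previously reported phishing email.
reported = "Hi Alex, please review the attached invoice from Northwind before Friday."
blocklist = {fingerprint(reported)}

# The same lure, personalized for a different recipient and vendor.
variant = "Hi Priya, please review the attached invoice from Contoso before Friday."

print(fingerprint(reported) in blocklist)  # True
print(fingerprint(variant) in blocklist)   # False
```

Changing only the recipient and vendor name produces a fingerprint the blocklist has never seen, so the repetition this model depends on never occurs. Fuzzy hashing or similarity scoring can narrow the gap when attackers reuse a template, but it helps less when each message is written from scratch for its target.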

Human Factors That Increase Risk

When messages feel personal, users rely on intuition.

Risk increases due to:

- Trust in familiarity
- Reduced verification under pressure
- Cognitive overload and routine behavior

These factors reinforce why humans remain central to security outcomes.

Warning Signs That Personalization Is Being Abused

Red Flags in Highly Tailored Messages

Even convincing messages may show subtle indicators:

- Requests to bypass normal processes
- Pressure to keep actions confidential
- Inconsistencies in tone or context
- Urgent actions outside routine workflows

These overlap with the signals discussed in Common Social Engineering Red Flags Most Users Miss.
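Requests to bypass process, pressure for confidentiality, and urgency often surface as recognizable phrases, so a simple keyword pass can prompt a closer look. The Python sketch below is illustrative only: the phrase patterns in the hypothetical `RED_FLAG_PATTERNS` table are assumptions, an attacker can easily word around them, and inconsistencies in tone or context still need human judgment.

```python
import re

# Hypothetical phrase patterns, one category per red flag. A real deployment
# would need far broader, tested patterns; these are illustrative only.
RED_FLAG_PATTERNS = {
    "bypass_process": [r"\bskip (the )?(usual|normal) (process|approval)", r"\bno need to (check|verify)"],
    "confidentiality": [r"\bkeep (this|it) (confidential|between us)", r"\bdon'?t (tell|mention)"],
    "urgency": [r"\burgent(ly)?\b", r"\bimmediately\b", r"\bwithin the hour\b"],
}


def red_flags(message: str) -> list[str]:
    """Return the red-flag categories whose patterns appear in the message."""
    text = message.lower()
    return [
        flag
        for flag, patterns in RED_FLAG_PATTERNS.items()
        if any(re.search(p, text) for p in patterns)
    ]


msg = "Urgent: please process this wire today and keep this between us. No need to verify with finance."
print(red_flags(msg))  # ['bypass_process', 'confidentiality', 'urgency']
```

A hit is a reason to verify through a separate channel, not proof of phishing, and an empty result does not mean a message is safe.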

How Organizations Can Reduce Exposure

Effective defense focuses on process and verification.

Key measures include:

- Limiting public exposure of employee data
- Enforcing verification for sensitive requests
- Monitoring unusual access or approvals
- Educating users about targeted manipulation

Personalization should never replace confirmation.
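Enforcing verification for sensitive requests can be expressed as a rule: confirmation only counts when it happens over a channel the requester is already known by, never one supplied inside the message itself. The Python sketch below models that rule; the action names in `SENSITIVE_ACTIONS`, the `Request` and `Verification` types, and the directory format are hypothetical placeholders for whatever an organization actually uses.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical request types that always require out-of-band confirmation.
SENSITIVE_ACTIONS = {"change_bank_details", "wire_transfer", "reset_mfa", "share_credentials"}


@dataclass
class Request:
    requester: str      # identity the message claims to come from
    action: str         # what the message asks the recipient to do
    reply_channel: str  # contact details supplied inside the message (untrusted)


@dataclass
class Verification:
    channel_used: str   # channel the employee actually used to confirm
    confirmed: bool     # whether the requester confirmed on that channel


def may_proceed(req: Request, check: Optional[Verification], directory: dict[str, str]) -> bool:
    """Allow a sensitive action only after confirmation over the directory channel."""
    if req.action not in SENSITIVE_ACTIONS:
        return True  # routine request: normal handling applies
    if check is None or not check.confirmed:
        return False  # no confirmation yet
    trusted = directory.get(req.requester)
    # Confirming via the reply_channel from the message does not count
    # unless it happens to match the independently known contact.
    return trusted is not None and check.channel_used == trusted


directory = {"cfo": "+1-555-0100"}  # hypothetical directory entry
req = Request("cfo", "wire_transfer", reply_channel="+1-555-0199")
print(may_proceed(req, Verification("+1-555-0199", True), directory))  # False: number came from the message
print(may_proceed(req, Verification("+1-555-0100", True), directory))  # True: number from the directory
```

The design choice is that the message never gets to define how it is verified: however accurate its personal details, a callback number or reply address it supplies is treated as untrusted.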

External Perspective on AI-Driven Personalized Phishing

Security research consistently highlights the combination of AI and leaked data as a major driver of modern phishing effectiveness, as reflected in the Verizon Data Breach Investigations Report.

Frequently Asked Questions (FAQ)

What makes personalized phishing more dangerous?

It uses real personal details, which increases trust and response rates.

Does personalized phishing always use AI?

Not always, but AI makes personalization faster and more scalable.

Can data from leaks that are years old still be used?

Yes. Old data is often reused and combined with new information.

Are organizations or individuals more at risk?

Both, but organizations often face higher impact because targeted employees hold access and authority.

What is the most effective defense?

Strong verification processes and reduced data exposure.

Conclusion

Personalized phishing using AI and data leaks represents a shift from broad deception to precise manipulation. By combining real data with adaptive messaging, attackers create scenarios that feel legitimate and urgent.

Defending against this threat requires acknowledging that relevance is no longer a sign of safety. In a data-rich environment, verification—not familiarity—must guide decisions.