How organizations can prepare for AI-based social engineering has become a practical question rather than a theoretical one. As attackers use AI to personalize manipulation, imitate identities, and automate deception, organizations must assume that traditional awareness and perimeter defenses are no longer sufficient.

Preparation today is less about predicting specific attacks and more about designing systems that remain resilient when manipulation succeeds. This article explains how organizations can realistically prepare for AI-based social engineering by focusing on process, verification, and human-centered controls.

Accepting That AI-Based Social Engineering Will Succeed

The first step in preparation is mindset.

Organizations must accept that:

- Some phishing and impersonation attempts will bypass filters
- Some employees will interact with deceptive messages
- AI will make attacks more convincing over time

Preparation starts when defenses are built for failure, not perfection.

Shifting Focus From Detection to Damage Control

Detection remains important, but it is no longer enough.

Effective preparation prioritizes:

- Limiting what a single action can authorize
- Reducing the blast radius of mistakes
- Preventing one decision from triggering major impact

This shift reflects limitations discussed in Phishing Detection Tools Compared and reframes security as containment rather than prevention.

Redesigning Processes That Assume Trust

Many business workflows assume that identity equals legitimacy.

AI-based social engineering exploits:

- Authority-based approvals
- Informal requests
- Unverified urgency

Organizations should redesign processes so that:

- No single message can authorize sensitive actions
- Identity claims always require verification
- Speed never overrides validation

Making Verification Non-Negotiable

Verification is the strongest defense against manipulation because it still works when a deceptive message goes undetected.

Effective verification includes:

- Mandatory call-back or out-of-band checks
- Multi-person approval for high-risk actions
- Clear rules for exceptions and escalation

These controls neutralize threats described in AI-Driven Impersonation Scams Explained regardless of how realistic the impersonation appears.
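As a rough illustration of how these verification rules could be encoded in a workflow tool, the sketch below gates high-risk actions on both a confirmed call-back and approval from two people other than the requester. The action names, the approver threshold, and the `Request` and `may_execute` names are all hypothetical and chosen only for this example; a real policy would set them to match the organization's own risk model.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical policy values, chosen only for illustration.
HIGH_RISK_ACTIONS = {"wire_transfer", "payroll_change", "credential_reset"}
REQUIRED_APPROVERS = 2


@dataclass
class Request:
    action: str
    requester: str
    # True only after someone confirmed the request through a separate channel.
    callback_confirmed: bool = False
    approvers: Set[str] = field(default_factory=set)


def may_execute(req: Request) -> bool:
    """Return True if the request satisfies the verification policy."""
    if req.action not in HIGH_RISK_ACTIONS:
        return True
    # The requester never counts as an approver of their own request.
    independent = req.approvers - {req.requester}
    return req.callback_confirmed and len(independent) >= REQUIRED_APPROVERS


if __name__ == "__main__":
    req = Request("wire_transfer", "alice", callback_confirmed=True,
                  approvers={"alice", "bob"})
    print(may_execute(req))   # only one independent approver so far
    req.approvers.add("carol")
    print(may_execute(req))   # call-back confirmed and two independent approvers
```

The check deliberately ignores how convincing the original message was: a flawless impersonation still fails unless the call-back and the independent approvals both happen.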

Reducing Public Exposure of Organizational Data

AI-based attacks improve as data availability increases.

Organizations should:

- Minimize public employee role details
- Limit exposure of internal structure
- Review what is shared on social platforms

This reduces the effectiveness of targeting techniques discussed in How Attackers Profile Victims Using Public Information and increases attacker uncertainty.

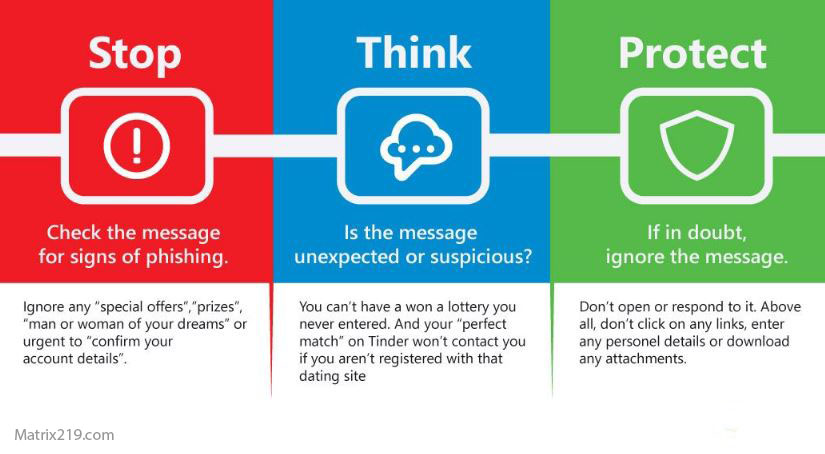

Updating Security Training for AI-Driven Threats

Traditional awareness training focuses on obvious scams, such as messages with poor grammar or generic greetings.

Modern training must:

- Include realistic AI-powered scenarios
- Emphasize verification over intuition
- Teach that professionalism does not equal legitimacy

Training should reflect how AI removes classic red flags rather than reinforce outdated ones.

Supporting Employees Instead of Blaming Them

Punitive cultures discourage reporting.

Prepared organizations:

- Encourage early reporting without fear
- Treat interaction as a signal, not a failure
- Use incidents to improve process

This approach aligns with guidance discussed in How to Report a Phishing Attack Properly and increases visibility across the organization.

Using AI Defensively Without Over-Reliance

AI can strengthen preparation when used correctly.

Defensive uses include:

- Highlighting unusual behavior
- Reducing alert fatigue
- Supporting investigation and triage

However, AI should assist decisions—not replace accountability, as discussed in Can AI Defend Against Social Engineering Attacks?

Testing Organizational Resilience Regularly

Preparation requires validation.

Organizations should:

- Run simulated AI-based attacks
- Test verification workflows under pressure
- Measure response speed and clarity

These exercises reveal gaps that policies alone cannot expose.
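One way to make these exercises measurable is to summarize each simulation with a few simple numbers. The sketch below is a minimal example under assumed inputs: the `SimulationResult` record, its fields, and the three metrics are hypothetical choices for illustration, not a standard scoring method.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class SimulationResult:
    employee: str
    interacted: bool                    # clicked, replied, or otherwise engaged
    minutes_to_report: Optional[float]  # None if the message was never reported


def summarize(results: List[SimulationResult]) -> Dict[str, Optional[float]]:
    """Compute interaction rate, report rate, and median time to report."""
    if not results:
        raise ValueError("no simulation results to summarize")
    total = len(results)
    report_times = [r.minutes_to_report for r in results
                    if r.minutes_to_report is not None]
    return {
        "interaction_rate": sum(r.interacted for r in results) / total,
        "report_rate": len(report_times) / total,
        "median_minutes_to_report": median(report_times) if report_times else None,
    }


if __name__ == "__main__":
    sample = [
        SimulationResult("a", True, 12.0),
        SimulationResult("b", False, 4.0),
        SimulationResult("c", True, None),
        SimulationResult("d", False, None),
    ]
    print(summarize(sample))
```

Tracking the report rate alongside the interaction rate keeps the focus on visibility rather than blame: an employee who interacts and then reports quickly still counts as a useful signal.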

Treating Identity as Untrusted Input

The most important preparation shift is conceptual.

Organizations must treat:

- Emails, calls, chats, and videos
- Authority claims and familiar identities

as untrusted input by default.

Verification—not recognition—should guide every sensitive decision.
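In software terms, this resembles validating user input before acting on it. The sketch below shows one possible shape for that idea: the identity claimed in a message is only a lookup key, and the call-back contact always comes from an internal directory, never from the message itself. The `DIRECTORY` contents, the `InboundRequest` fields, and the `next_step` function are hypothetical and exist only for this example.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical internal directory, maintained separately from any inbound message.
DIRECTORY: Dict[str, str] = {"cfo@example.com": "+1-555-0100"}


@dataclass(frozen=True)
class InboundRequest:
    claimed_sender: str                    # an unverified claim, not a fact
    channel: str                           # "email", "call", "chat", "video"
    contact_in_message: Optional[str] = None  # intentionally never used


def next_step(req: InboundRequest, confirmed_via_directory: bool) -> str:
    """Decide what to do with a sensitive request based on verification only."""
    contact = DIRECTORY.get(req.claimed_sender)
    if contact is None:
        return "escalate"            # no trusted contact on record
    if not confirmed_via_directory:
        return "verify:" + contact   # call back using the directory entry
    return "proceed"


if __name__ == "__main__":
    req = InboundRequest("cfo@example.com", "video",
                         contact_in_message="+1-555-0199")
    print(next_step(req, confirmed_via_directory=False))
    print(next_step(req, confirmed_via_directory=True))
```

The channel and the number supplied in the message play no part in the decision, which is the point: a familiar voice or face does not change the outcome until the directory-based check succeeds.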

External Perspective on Organizational Preparedness

Cybersecurity frameworks increasingly emphasize resilience, verification, and human-centered controls as core defenses against manipulation-driven threats, as reflected in NIST Human-Centered Cybersecurity Guidance.

Frequently Asked Questions (FAQ)

Can organizations fully prevent AI-based social engineering?

No. Preparation focuses on limiting impact, not stopping every attempt.

Is verification more important than detection?

Yes. Verification stops manipulation even when detection fails.

Should organizations reduce employee autonomy?

No. They should reduce risk concentration, not trust in people.

Does AI make preparation harder?

It changes the threat, but strong process still works.

What is the single most important preparation step?

Designing workflows that do not rely on trust alone.

Conclusion

How organizations can prepare for AI-based social engineering is ultimately a question of design, not technology. AI amplifies deception, but it does not override well-structured processes, mandatory verification, and clear accountability.

The organizations that succeed will not be those with the most advanced tools—but those that assume manipulation is inevitable and design systems that remain safe when humans are targeted.