Email scams have evolved dramatically with the rise of generative AI. Messages that once contained obvious grammar mistakes and awkward phrasing are now polished, contextual, and tailored to the recipient. As a result, many users—and security tools—struggle to tell legitimate messages from malicious ones.

This article explains why AI-generated phishing emails are harder to detect, how attackers use AI to improve realism and targeting, and what this shift means for defenses that relied on spotting familiar red flags.

From Generic Messages to Contextual Emails

Earlier phishing campaigns depended on scale rather than accuracy. AI lets attackers have both.

Attackers now generate emails that:

- Match professional tone and role
- Reference real tools or workflows
- Adapt language to region and industry

This evolution removes many cues users were trained to distrust.

Language Quality No Longer Reveals Intent

One of the biggest changes is linguistic quality.

AI-written emails typically:

- Use correct grammar and spelling
- Maintain consistent tone throughout
- Avoid exaggerated urgency language

Because language quality was a common detection signal, its disappearance makes manual review less reliable.

Personalization at Scale

AI allows attackers to personalize messages automatically rather than selectively.

Personalization may include:

- Job titles and departments
- Recent projects or events
- Familiar collaborators or vendors

This scale builds on the profiling techniques described in How Attackers Profile Victims Using Public Information, but it removes the time cost that once limited targeting.

Adaptive Content During Active Campaigns

AI enables rapid iteration.

During a campaign, attackers can:

- Adjust phrasing based on responses
- Swap themes that trigger engagement
- Localize content instantly

This adaptability reduces repetition and weakens pattern-based detection.

Why Traditional Filters Struggle

Many email security tools rely on known indicators.

Challenges include:

- Unique content per recipient
- Legitimate hosting and links
- Lack of malware or attachments

These traits mirror limitations discussed in Phishing Detection Tools Compared and explain why clean-looking messages still succeed.
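To make the "unique content per recipient" problem concrete, here is a minimal Python sketch. It assumes a filter that blocks messages by an exact fingerprint of a previously seen body, which is a simplification and not a description of any particular product. The function names and both sample messages are hypothetical.

```python
import hashlib


def fingerprint(body: str) -> str:
    """Exact-match signature: SHA-256 of the lowercased, whitespace-normalized body."""
    normalized = " ".join(body.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two messages, from 0.0 (disjoint) to 1.0 (identical sets)."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


# Hypothetical messages: same intent, reworded for a different recipient.
known_bad = (
    "Hi Dana, the Q3 invoice portal has moved. "
    "Please sign in to confirm your vendor details."
)
variant = (
    "Hello Sam, finance migrated the billing dashboard this week. "
    "Could you log in and verify your supplier record?"
)

# A blocklist built from the message that was already reported.
blocklist = {fingerprint(known_bad)}

# The reworded variant has a different fingerprint, so exact matching misses it.
print("variant blocked by fingerprint:", fingerprint(variant) in blocklist)

# Word overlap is also low, so a simple similarity threshold is unlikely to help.
print("word overlap:", round(jaccard(known_bad, variant), 2))
```

Both messages ask for the same action, yet they share almost no wording. Any signal that depends on having seen the text before has little to match against when each recipient gets a different version.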

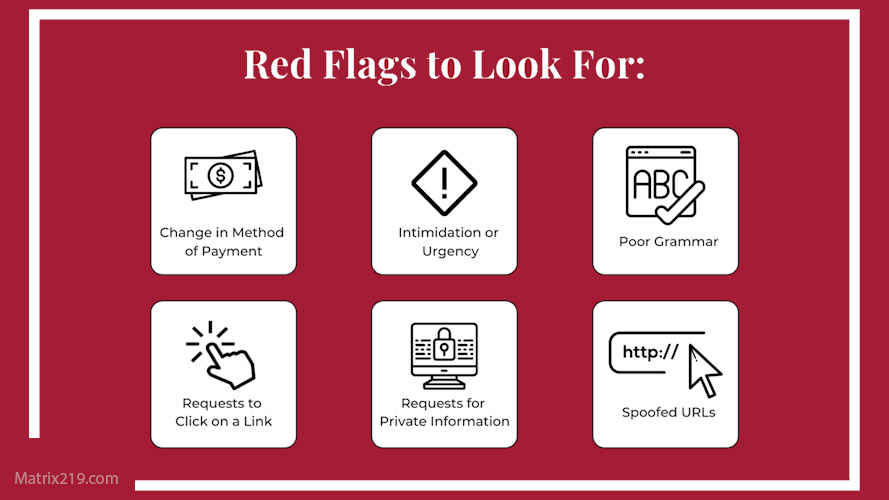

The Disappearance of Familiar Red Flags

Classic warning signs—poor grammar, generic greetings, odd formatting—are increasingly absent.

Users now face emails that:

- Look routine
- Sound professional
- Fit normal workflows

This raises the risk of reflexive action, especially under time pressure.

Human Factors Under AI-Driven Messaging

When emails feel relevant and well-written, recipients rely on intuition rather than verification.

AI-driven phishing targets people before it targets systems. It exploits:

- Trust in routine communication
- Cognitive shortcuts
- Fatigue and multitasking

These dynamics reinforce human-centric risks explained in Why Humans Are the Weakest Link in Cybersecurity.

Why Detection Must Shift From Content to Context

Because content quality is no longer a giveaway, defenses must emphasize context.

More reliable signals include:

- Unusual timing or requests
- Deviations from established process
- Requests to bypass verification

This approach aligns with the need to design systems that anticipate manipulation rather than perfect behavior.
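The three contextual signals listed above can be sketched as a simple rule check. This is an illustrative Python example, not a production detector: the `RequestContext` fields, the working-hours window, and the flag names are all assumptions made for the sketch, and a real deployment would derive them from its own mail metadata and documented procedures.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class RequestContext:
    """Hypothetical metadata about an emailed request; none of it depends on wording."""
    received_at: datetime
    follows_documented_process: bool
    asks_to_skip_verification: bool


def context_flags(ctx: RequestContext, workday_start: int = 8, workday_end: int = 18) -> list[str]:
    """Return the contextual warning signs present in a request.

    The default working hours (08:00 to 18:00, Monday to Friday) are an assumption.
    """
    flags = []
    outside_hours = not (workday_start <= ctx.received_at.hour < workday_end)
    weekend = ctx.received_at.weekday() >= 5  # 5 = Saturday, 6 = Sunday
    if outside_hours or weekend:
        flags.append("unusual timing")
    if not ctx.follows_documented_process:
        flags.append("deviates from established process")
    if ctx.asks_to_skip_verification:
        flags.append("asks to bypass verification")
    return flags


# Example: a payment change requested late on a Saturday, outside the normal
# approval path, with a request to skip the usual callback.
request = RequestContext(
    received_at=datetime(2024, 3, 2, 23, 15),
    follows_documented_process=False,
    asks_to_skip_verification=True,
)
print(context_flags(request))
```

None of these checks reads the message body, so a well-written email gains nothing against them. The design choice is to treat any flag as a reason to verify through the normal process rather than as proof of malice.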

External Perspective on AI-Written Email Threats

Security research increasingly notes that generative AI improves attack realism faster than defensive heuristics can adapt, a trend reflected in ENISA AI and Cybersecurity Threat Landscape.

Frequently Asked Questions (FAQ)

Are AI-generated phishing emails always personalized?

Not always, but personalization is now cheap enough to be common.

Can users spot AI-written emails by style alone?

Rarely. Style and grammar are no longer reliable indicators.

Do AI emails bypass email security completely?

No, but they reduce the effectiveness of content-based filters.

Is AI used to send emails automatically?

Often, AI generates the content while humans control timing and targets.

What is the best defense against realistic phishing emails?

Verification of requests and strong process controls.

Conclusion

AI-generated phishing emails are harder to detect because they remove the very flaws users and tools relied on for years. By producing polished, contextual, and adaptive messages, attackers shift the challenge from spotting mistakes to verifying intent.

Defending against this evolution requires a focus on context, process, and verification—not assumptions about how malicious emails should look.